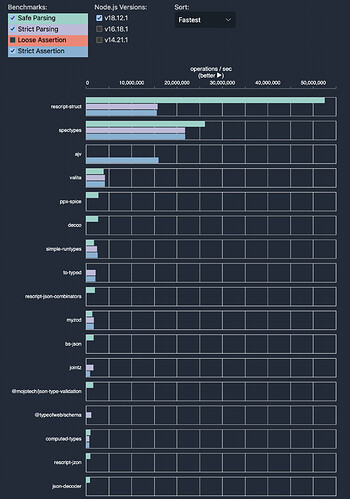

In the benchmark, each library has to decode an object into the `data` record below, which has a nested record and several primitive fields. The score is how many times per second the object gets decoded, so higher is better.

```rescript
type nested = {foo: string, num: float, bool: bool}

type data = {
  number: float,
  negNumber: float,
  maxNumber: float,
  string: string,
  longString: string,
  boolean: bool,
  deeplyNested: nested,
}
```
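To make the target concrete, here is a minimal TypeScript sketch of a hand-written decoder for this shape, roughly how a fully inlined decoder could look once compiled to JavaScript. It is an illustration under assumptions, not code from any of the benchmarked libraries: the `Nested`/`Data` interfaces mirror the records above, and `decodeData` and `err` are hypothetical names. Error handling is simplified to throwing on the first mismatch.

```typescript
// Hypothetical TypeScript mirror of the ReScript `nested` and `data` records.
interface Nested {
  foo: string;
  num: number;
  bool: boolean;
}

interface Data {
  number: number;
  negNumber: number;
  maxNumber: number;
  string: string;
  longString: string;
  boolean: boolean;
  deeplyNested: Nested;
}

// Builds the error thrown on the first mismatch (no error accumulation, no paths list).
function err(path: string, expected: string): TypeError {
  return new TypeError(`Expected ${expected} at ${path}`);
}

// Every check is written out inline: no combinators, no helper records.
function decodeData(json: unknown): Data {
  if (typeof json !== "object" || json === null || Array.isArray(json)) throw err("root", "object");
  const o = json as Record<string, unknown>;

  const vNumber = o["number"];
  if (typeof vNumber !== "number") throw err("number", "number");
  const vNegNumber = o["negNumber"];
  if (typeof vNegNumber !== "number") throw err("negNumber", "number");
  const vMaxNumber = o["maxNumber"];
  if (typeof vMaxNumber !== "number") throw err("maxNumber", "number");
  const vString = o["string"];
  if (typeof vString !== "string") throw err("string", "string");
  const vLongString = o["longString"];
  if (typeof vLongString !== "string") throw err("longString", "string");
  const vBoolean = o["boolean"];
  if (typeof vBoolean !== "boolean") throw err("boolean", "boolean");

  const n = o["deeplyNested"];
  if (typeof n !== "object" || n === null || Array.isArray(n)) throw err("deeplyNested", "object");
  const no = n as Record<string, unknown>;
  const vFoo = no["foo"];
  if (typeof vFoo !== "string") throw err("deeplyNested.foo", "string");
  const vNum = no["num"];
  if (typeof vNum !== "number") throw err("deeplyNested.num", "number");
  const vBool = no["bool"];
  if (typeof vBool !== "boolean") throw err("deeplyNested.bool", "boolean");

  // On the success path, the only allocations are the two result records.
  return {
    number: vNumber,
    negNumber: vNegNumber,
    maxNumber: vMaxNumber,
    string: vString,
    longString: vLongString,
    boolean: vBoolean,
    deeplyNested: { foo: vFoo, num: vNum, bool: vBool },
  };
}
```

Two result records is roughly the minimum any decoder for this shape has to allocate.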

From what I've observed, each object allocation costs about 250_000 ops/s in this kind of benchmark, while an additional if statement or function call during field decoding costs between 50_000 and 100_000 ops/s.
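If you want a feel for numbers like these on your own machine, a crude ops/s harness is enough. The TypeScript sketch below is an assumption-laden example, not the benchmark's actual harness: `opsPerSecond`, `decodeWithAlloc` and `decodeNoAlloc` are hypothetical, and the two decoders differ only in whether they build an intermediate object for the field.

```typescript
// Keeps the last result reachable so the calls are harder for the JIT to drop.
let lastResult: unknown;

// Calls `fn` in batches until at least `durationMs` (must be > 0) have passed,
// then reports calls per second. Date.now() is coarse, so use a few hundred ms.
function opsPerSecond(fn: () => unknown, durationMs = 300): number {
  const start = Date.now();
  let ops = 0;
  let elapsed = 0;
  while (elapsed < durationMs) {
    for (let i = 0; i < 10_000; i++) lastResult = fn();
    ops += 10_000;
    elapsed = Date.now() - start;
  }
  return (ops / elapsed) * 1000;
}

// Variant A: wraps the field result in a fresh object before using it.
function decodeWithAlloc(o: Record<string, unknown>): string {
  const r = { ok: typeof o["foo"] === "string", value: o["foo"] };
  if (!r.ok) throw new TypeError("Expected string at foo");
  return r.value as string;
}

// Variant B: the same check without the intermediate object.
function decodeNoAlloc(o: Record<string, unknown>): string {
  const v = o["foo"];
  if (typeof v !== "string") throw new TypeError("Expected string at foo");
  return v;
}

const sample: Record<string, unknown> = { foo: "bar" };
console.log("with allocation:", Math.round(opsPerSecond(() => decodeWithAlloc(sample))), "ops/s");
console.log("without allocation:", Math.round(opsPerSecond(() => decodeNoAlloc(sample))), "ops/s");
```

Results depend heavily on the engine and the machine. Modern JITs can sometimes remove short-lived objects through escape analysis, so a toy like this may show a smaller gap than a real decoder does.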

In this case decco is faster because it generates the decode function at compile time, with the logic for applying each field's decoder inlined. But the real question is why decco is only a few percent faster than libraries that combine decoders at runtime and can't inline everything.
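The difference can be sketched in TypeScript. Below, `obj` combines per-field decoders at runtime, so each field goes through a loop iteration and an indirect call, while `decodePointInlined` is what a code generator could emit for the same shape. All names here are hypothetical; this is neither decco's output nor another library's API.

```typescript
// A decoder is a function from unknown JSON to a typed value; it throws on mismatch.
type Decoder<T> = (json: unknown) => T;

const str: Decoder<string> = (j) => {
  if (typeof j !== "string") throw new TypeError("Expected string");
  return j;
};

const num: Decoder<number> = (j) => {
  if (typeof j !== "number") throw new TypeError("Expected number");
  return j;
};

// Runtime composition: the object decoder loops over a map of field decoders
// and calls each one through a function reference.
function obj<T>(fields: Record<string, Decoder<unknown>>): Decoder<T> {
  const keys = Object.keys(fields);
  return (j) => {
    if (typeof j !== "object" || j === null) throw new TypeError("Expected object");
    const o = j as Record<string, unknown>;
    const out: Record<string, unknown> = {};
    for (const k of keys) out[k] = fields[k]!(o[k]);
    return out as unknown as T;
  };
}

type Point = { x: number; label: string };

const decodePointCombined = obj<Point>({ x: num, label: str });

// What a generator could emit instead: checks inlined, no loop, no indirect calls.
function decodePointInlined(j: unknown): Point {
  if (typeof j !== "object" || j === null) throw new TypeError("Expected object");
  const o = j as Record<string, unknown>;
  const x = o["x"];
  if (typeof x !== "number") throw new TypeError("Expected number");
  const label = o["label"];
  if (typeof label !== "string") throw new TypeError("Expected string");
  return { x, label };
}
```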

For instance, if rescript-json-combinators didn’t create the `type fieldDecoders = {optional, required}` record every time it decodes an object, it could be even faster than the current decco. That is two object allocations, so roughly a 500_000 ops/s improvement in the benchmark.
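Here is a TypeScript sketch of the general pattern, assuming an object decoder that hands a `{required, optional}` helper record to user code. It does not reproduce rescript-json-combinators' actual source; `FieldDecoders`, `objectPerCall`, `SharedFieldDecoders`, `sharedFields` and `objectShared` are hypothetical. The per-call version builds the helper record and its methods on every decode; the shared version allocates the helpers once and passes the dict explicitly.

```typescript
type Decoder<T> = (json: unknown) => T;

const num: Decoder<number> = (j) => {
  if (typeof j !== "number") throw new TypeError("Expected number");
  return j;
};

function asObject(j: unknown): Record<string, unknown> {
  if (typeof j !== "object" || j === null || Array.isArray(j)) throw new TypeError("Expected object");
  return j as Record<string, unknown>;
}

// Hypothetical helper record handed to the user's object decoder.
interface FieldDecoders {
  required<T>(name: string, d: Decoder<T>): T;
  optional<T>(name: string, d: Decoder<T>): T | undefined;
}

// Per-call version: a new helper record, plus new method functions closing
// over `o`, is created every time an object is decoded.
function objectPerCall<T>(build: (f: FieldDecoders) => T): Decoder<T> {
  return (j) => {
    const o = asObject(j);
    const f: FieldDecoders = {
      required<U>(name: string, d: Decoder<U>): U {
        return d(o[name]);
      },
      optional<U>(name: string, d: Decoder<U>): U | undefined {
        return o[name] === undefined ? undefined : d(o[name]);
      },
    };
    return build(f);
  };
}

// Shared version: the helpers take the dict as an argument, so a single
// record, allocated once, serves every decode call.
interface SharedFieldDecoders {
  required<T>(o: Record<string, unknown>, name: string, d: Decoder<T>): T;
  optional<T>(o: Record<string, unknown>, name: string, d: Decoder<T>): T | undefined;
}

const sharedFields: SharedFieldDecoders = {
  required<T>(o: Record<string, unknown>, name: string, d: Decoder<T>): T {
    return d(o[name]);
  },
  optional<T>(o: Record<string, unknown>, name: string, d: Decoder<T>): T | undefined {
    return o[name] === undefined ? undefined : d(o[name]);
  },
};

function objectShared<T>(build: (f: SharedFieldDecoders, o: Record<string, unknown>) => T): Decoder<T> {
  return (j) => build(sharedFields, asObject(j));
}

// Both decoders accept the same input; only the allocation pattern differs.
const decodeA = objectPerCall((f) => ({ x: f.required("x", num), y: f.optional("y", num) }));
const decodeB = objectShared((f, o) => ({ x: f.required(o, "x", num), y: f.optional(o, "y", num) }));
```

The trade-off is ergonomics: in the shared version, user code has to thread `o` through every field call.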

Some libraries, like rescript-jzon, use the `Js.Json` API under the hood, which adds overhead. Theoretically, rescript-jzon should be as fast as rescript-struct.
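One way such an API can add overhead, as I understand it: `Js.Json.classify` returns a variant, and in the compiled JavaScript a variant constructor with a payload is an object, so each inspected value gets wrapped before the decoder can look at it. The TypeScript sketch below imitates that with a hypothetical `classify` returning a tagged union, next to a direct `typeof` check; it is not rescript-jzon's code.

```typescript
// Hypothetical tagged representation, similar in spirit to a classify-style variant.
type Classified =
  | { tag: "string"; value: string }
  | { tag: "number"; value: number }
  | { tag: "bool"; value: boolean }
  | { tag: "null" }
  | { tag: "array"; value: unknown[] }
  | { tag: "object"; value: Record<string, unknown> };

// Allocates a wrapper object for every value it inspects.
function classify(j: unknown): Classified {
  if (typeof j === "string") return { tag: "string", value: j };
  if (typeof j === "number") return { tag: "number", value: j };
  if (typeof j === "boolean") return { tag: "bool", value: j };
  if (j === null) return { tag: "null" };
  if (Array.isArray(j)) return { tag: "array", value: j };
  if (typeof j === "object") return { tag: "object", value: j as Record<string, unknown> };
  throw new TypeError("Not a JSON value");
}

// Decoding through classify: an extra function call and an extra allocation per field.
function stringViaClassify(j: unknown): string {
  const c = classify(j);
  if (c.tag !== "string") throw new TypeError("Expected string");
  return c.value;
}

// Direct check: no wrapper object at all.
function stringDirect(j: unknown): string {
  if (typeof j !== "string") throw new TypeError("Expected string");
  return j;
}
```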

My rescript-struct, on the other hand, has a declarative API, unlike rescript-json-combinators, which makes it almost impossible to squeeze out even a little more performance in the current design: there are some unavoidable function calls and iterators.
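As a rough illustration of where those calls and iterations come from, here is a TypeScript sketch of a generic interpreter over a declarative schema. The `Schema` type and `parse` are hypothetical and much simpler than rescript-struct; the point is only that one piece of code serves every schema, so it has to dispatch and loop on each decode.

```typescript
// Hypothetical declarative schema: plain data describing the expected shape.
type Schema =
  | { kind: "string" }
  | { kind: "number" }
  | { kind: "boolean" }
  | { kind: "object"; fields: Record<string, Schema> };

// Generic interpreter: the same code handles every schema, so it dispatches
// on `kind` and walks the fields on every single decode.
function parse(schema: Schema, j: unknown): unknown {
  switch (schema.kind) {
    case "string":
    case "number":
    case "boolean":
      if (typeof j !== schema.kind) throw new TypeError(`Expected ${schema.kind}`);
      return j;
    case "object": {
      if (typeof j !== "object" || j === null || Array.isArray(j)) throw new TypeError("Expected object");
      const o = j as Record<string, unknown>;
      const out: Record<string, unknown> = {};
      // Object.entries allocates a fresh array here (a real library would likely
      // precompute it), and the loop makes a recursive call per field.
      for (const [key, field] of Object.entries(schema.fields)) {
        out[key] = parse(field, o[key]);
      }
      return out;
    }
  }
}

const pointSchema: Schema = {
  kind: "object",
  fields: { x: { kind: "number" }, label: { kind: "string" } },
};
const point = parse(pointSchema, { x: 1, label: "a" }) as { x: number; label: string };
```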

So if you need to be really fast, you have to generate the decoding function ahead of time with everything inlined. That can be done either at compile time, as decco does, or at runtime with `eval`/`new Function`, which nobody does, but I’m thinking about trying it.
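A minimal sketch of the runtime approach, in TypeScript: walk a declarative schema once, emit straight-line JavaScript source, and compile it with the standard `Function` constructor. The `Schema` shape, `emit` and `compile` are hypothetical; this is not an existing library's implementation. Keys are quoted with `JSON.stringify`, so field names always end up as valid string literals in the generated code.

```typescript
// Hypothetical declarative schema, same idea as in a runtime-interpreted library.
type Schema =
  | { kind: "string" }
  | { kind: "number" }
  | { kind: "boolean" }
  | { kind: "object"; fields: Record<string, Schema> };

// Emits JS source that validates the variable `v` against `schema`.
// For objects, it also reassigns `v` to a freshly built result object.
// `fresh` hands out unique variable names for the fields.
function emit(schema: Schema, v: string, fresh: () => string): string {
  if (schema.kind !== "object") {
    return `if (typeof ${v} !== "${schema.kind}") throw new TypeError("Expected ${schema.kind}");\n`;
  }
  let code = `if (typeof ${v} !== "object" || ${v} === null || Array.isArray(${v})) throw new TypeError("Expected object");\n`;
  const props: string[] = [];
  for (const [key, field] of Object.entries(schema.fields)) {
    const f = fresh();
    const k = JSON.stringify(key); // a safe JS string literal for any key
    code += `var ${f} = ${v}[${k}];\n` + emit(field, f, fresh);
    props.push(`${k}: ${f}`);
  }
  return code + `${v} = {${props.join(", ")}};\n`;
}

// Walks the schema once and compiles the generated source into a plain function.
function compile<T>(schema: Schema): (json: unknown) => T {
  let n = 0;
  const fresh = () => `v${n++}`;
  const body = emit(schema, "json", fresh) + "return json;";
  return new Function("json", body) as (json: unknown) => T;
}

type Point = { x: number; label: string; meta: { ok: boolean } };

const decodePoint = compile<Point>({
  kind: "object",
  fields: {
    x: { kind: "number" },
    label: { kind: "string" },
    meta: { kind: "object", fields: { ok: { kind: "boolean" } } },
  },
});

console.log(decodePoint({ x: 1, label: "a", meta: { ok: true } }));
```

The schema walk happens once inside `compile`; after that, each decode runs only the generated checks and object literals. Keep in mind that `new Function` is blocked under a Content Security Policy without `'unsafe-eval'`, and the schema should come from your own code, never from untrusted input.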

The problem with decco is that it uses the `Js.Json` API and doesn’t inline a lot of code that could be inlined. For example, replacing every `Belt_Option.getWithDefault(Js_dict.get(dict$1, "fieldName"), null)` in decco’s generated decoder with `dict$1["fieldName"] ?? null` increased performance from 3_200_122 ops/s to 19_841_462 ops/s on my local machine. Take a look at the spectypes benchmark; decco might be just as fast.
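The shape of that change, sketched in TypeScript. `dictGet` and `getWithDefault` are simplified, hypothetical stand-ins for `Js_dict.get` and `Belt_Option.getWithDefault`, not their real compiled source; in this simplified model both read functions return the same value for a present, missing, or `null` field.

```typescript
// Simplified stand-ins (not the real library source): here an "option" is just
// the value itself, or undefined when the key is absent.
function dictGet(dict: Record<string, unknown>, key: string): unknown {
  return key in dict ? dict[key] : undefined;
}

function getWithDefault<T>(opt: T | undefined, def: T): T {
  return opt !== undefined ? opt : def;
}

// Before: two helper calls per field read.
function readViaHelpers(dict: Record<string, unknown>): unknown {
  return getWithDefault(dictGet(dict, "fieldName"), null);
}

// After: a plain property read plus nullish coalescing, no calls at all.
function readInline(dict: Record<string, unknown>): unknown {
  return dict["fieldName"] ?? null;
}
```

Whether a JIT manages to inline such helpers on its own varies; the measurement above is what shows the gain in practice.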

But all of these are micro-optimizations. 1_000_000 ops/s is already very fast, and sometimes it’s better to invest time in a more convenient API than in raising benchmark numbers.