It seems like Belt doesn’t have a uniqueBy function. I could use a Set then transform it back to an Array, but that only works if the whole record is unique. I could bind to lodash or write a hacky reduce but I was wondering if there was a better way I wasn’t thinking of.

Not sure what you mean here.

Also, give us a concrete use-case! Always interested in those.

I don’t know if ReScript provides access to a JS Map yet, but would a Dict work for you?

I’m assuming you have an array of objects and you want to unique them by one of the object properties?

E.g.

type person = {

id: int,

name: string,

}

let uniqueById = persons =>

persons

->Js.Array2.map(person => (Js.Int.toString(person.id), person))

->Js.Dict.fromArray

->Js.Dict.values

let persons = [{id: 1, name: "One"}, {id: 2, name: "Two"}, {id: 1, name: "Three"}]

let uniquePersons = uniqueById(persons)

Hi,

In one of my projects, i extend Js.Array2 module and add distinctBy function like below.

Guess it’s what you are looking for.

module JsArray2Ex = {

include Js.Array2

let distinctBy: (t<'a>, 'a => 'b) => t<'a> = (arr, projection) => {

let result: t<'a> = []

let keys: t<'b> = []

let len = arr->length

for i in 0 to len - 1 {

let key = projection(arr[i])

switch keys->findIndex(x => x == key) {

| -1 => {

result->push(arr[i])->ignore

keys->push(key)->ignore

}

| _ => ()

}

}

result

}

}

// test

@unboxed type rec any = Any('a): any

let r =

[Any(2), Any(2), Any("two"), Any("two")]->JsArray2Ex.distinctBy(x => x) == [

Any(2),

Any("two"),

]

Js.log(r) // true

Regards,

Jazz

Assuming you don’t care about order, and you have no additional structure in your array, you can sort the array by a compare in O(n lg n) time and then pick out unique elements easily in O(n) time. It can be quite effective. If you have frequent updates to the array, it may also be efficient if the sort function you use is smooth.

In some cases you want groupBy over uniqueBy. You simply group elements in subarrays under the compare. This allows you to reconcile values which are supposed to be distinct. If your sort is stable, you can now also handle the subtle point of who wins the uniqueness competition: first occurence? last occurrence? random element? result of a monoidic operation? etc.

^ pretty much this, thus knowing the more concrete use-case. It seems massaging the data back and forth would be much worse for the perf and UX than just keep the ordering.

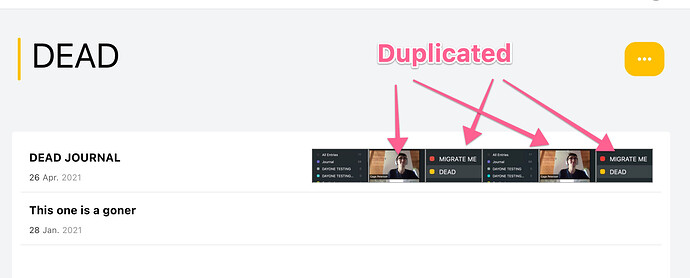

My usecase is basically I have these images that could be duplicated by the mobile app.

So it’s always going to be smaller than like 30 photos, but I just wanted to de-duplicate them by id.

Thanks @jazz and others for theirs solutions  .

.

30^2 is 900 operations btw. I’d recommend either just dumping into a set and back, or sort then deduplicate siblings. In fact I can’t think of a situation where it’s better to implement distinct through a linear search in a loop vs sort + dudupe siblings.

Though the real solution here might be to not have these duplications in the first place! Aka keep your photos ordering and binary search to insert or not.

Two ideas you might use:

One is to simply store the data as a Map indexed by the identity. Uniqueness is then simply a variant of handling presence in the map. Rendering is handled by reducing the map into something amenable, for instance React.element.

Another solution is to track a mask. It can be quite nice if you use the module system. The mask works like a 2ndary unique index on the data.

The core idea is that tracking a secondary index might be troublesome in the code in general. You might forget to keep it up-to-date. So you mint a new type, UniqArray.t<'a> where the only way to manipulate this type is through functions which maintain the invariant you want.

This “shifts” the problem so it isn’t handled at the time at which you render the data, but at the time where you insert the data into your data-structure. Because you know the array is already de-duplicated by means of the mask, rendering is just getting the underlying array.

Your code is then rewritten to use the new type all over the place.

Complexity is still the same. Maintaining uniqueness is O(lg n) and you need to do this for each element inserted, so you are still looking at O(n lg n).

Another thing to think a bit about is how immutable you want the data structure, as the following isn’t that nice in that department.

(code isn’t tested at all, but the core idea should be obvious and obtainable)

open Belt

module type UniqArray = {

type t<'a>

let make: unit => t<'a>

let add: (t<'a>, 'a, int) => option<t<'a>>

let toArray: t<'a> => array<'a>

}

module UniqArray: UniqArray = {

type t<'a> = {

arr: array<'a>,

mask: Set.Int.t,

}

let make = () => {arr: [], mask: Set.Int.empty}

let add = (ua, elem, id) => {

switch Set.Int.has(ua.mask, id) {

| true => None

| false => {

ignore(Js.Array.push(elem, ua.arr))

Some({...ua, mask: Set.Int.add(ua.mask, id)})

}

}

}

let toArray = ua => Js.Array.copy(ua.arr)

}

Addendum for those not familiar with this: Belt’s non-hashed data structures are already “sorted” under the hood by using a binary tree.